A full-stack URL shortener with Spring Boot and React — but the interesting part isn’t that it shortens URLs. It’s how it handles caching, rate limiting, and async processing.

Why Build This?

I wanted to understand:

- How caching actually works in production systems

- How to implement rate limiting without external dependencies

- How to decouple analytics from critical paths

- How high-throughput services are architected

Building a URL shortener let me explore all of these while shipping something real.

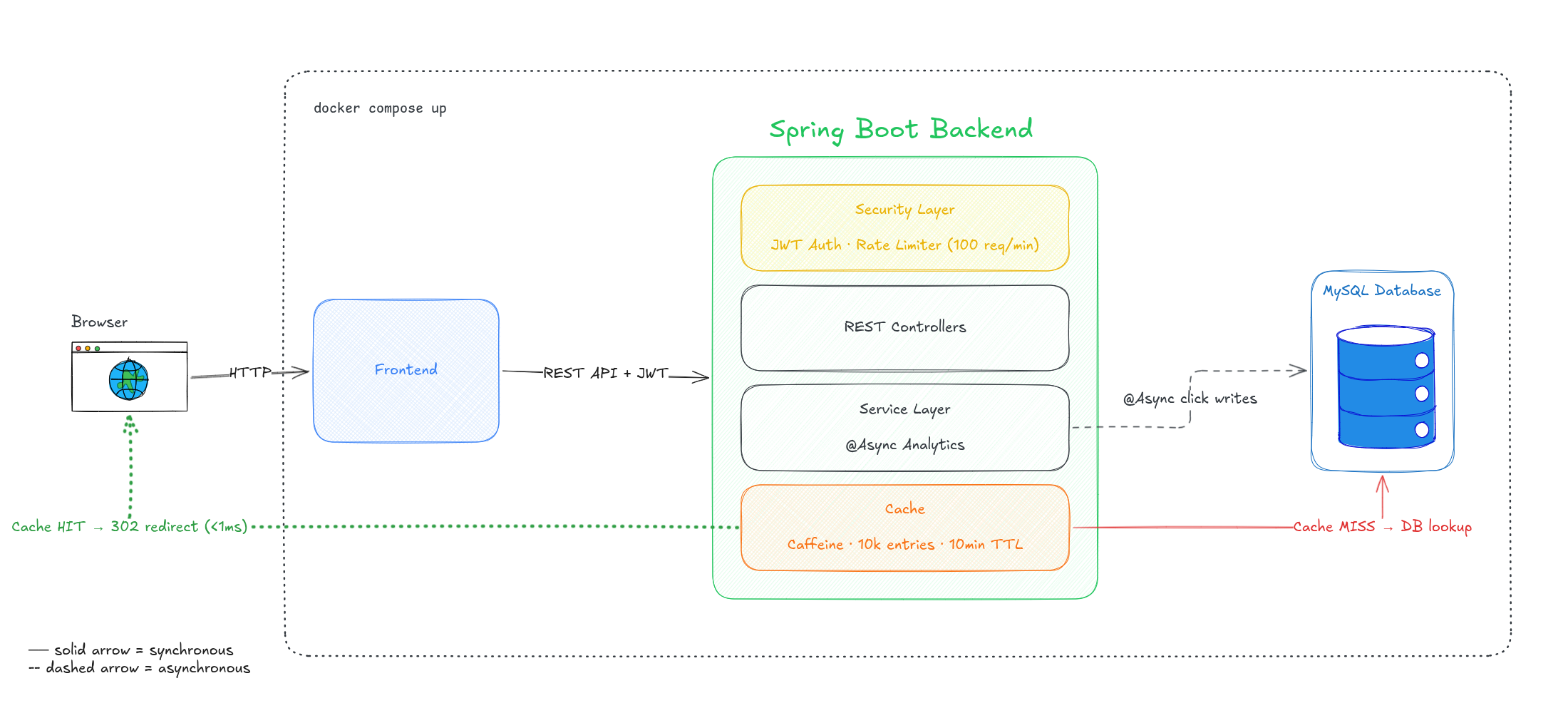

Architecture

The system has three main layers:

- Security layer - JWT auth + rate limiting (100 req/min per IP)

- Service layer - Business logic + async analytics processing

- Cache layer - Caffeine in-memory cache (10k entries, 10-min TTL)

Engineering Decisions

1. Caffeine Cache on the Redirect Hot Path

URL shorteners are read-heavy — roughly 1000 reads for every write. The redirect endpoint uses a cache-aside pattern with Caffeine (W-TinyLFU eviction) to serve cached redirects in under 1ms without hitting the database.

- Without cache: Every redirect = DB lookup (~5ms)

- With cache: Cache hit = in-memory lookup (<1ms)

Cache hit/miss ratios are logged on a scheduled interval for observability.
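The cache-aside flow described above can be sketched with JDK classes only. This is not Caffeine: a ConcurrentHashMap with per-entry expiry stands in for it, so there is no size bound and no W-TinyLFU eviction. The names RedirectCache, resolve, and hitRatio are hypothetical and chosen for illustration.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Function;

// Hypothetical JDK-only stand-in for the Caffeine-backed redirect cache.
// It shows the cache-aside flow only; it has no size bound or W-TinyLFU eviction.
class RedirectCache {
    private static final class Entry {
        final String url;
        final long expiresAtMillis;

        Entry(String url, long expiresAtMillis) {
            this.url = url;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> entries = new ConcurrentHashMap<>();
    private final Function<String, Optional<String>> database; // slow lookup, e.g. a repository call
    private final long ttlMillis;
    private final AtomicLong hits = new AtomicLong();
    private final AtomicLong misses = new AtomicLong();

    RedirectCache(Function<String, Optional<String>> database, long ttlMillis) {
        this.database = database;
        this.ttlMillis = ttlMillis;
    }

    // Cache-aside: check the cache first, fall back to the database on a miss,
    // then populate the cache so the next redirect is served from memory.
    Optional<String> resolve(String shortCode, long nowMillis) {
        Entry cached = entries.get(shortCode);
        if (cached != null && cached.expiresAtMillis > nowMillis) {
            hits.incrementAndGet();
            return Optional.of(cached.url);
        }
        misses.incrementAndGet();
        Optional<String> loaded = database.apply(shortCode);
        loaded.ifPresent(url -> entries.put(shortCode, new Entry(url, nowMillis + ttlMillis)));
        return loaded;
    }

    // Drop the cached entry when a URL is edited or deleted.
    void invalidate(String shortCode) {
        entries.remove(shortCode);
    }

    // Hit ratio in [0, 1]; 0 when nothing has been looked up yet.
    double hitRatio() {
        long h = hits.get();
        long total = h + misses.get();
        return total == 0 ? 0.0 : (double) h / total;
    }
}
```

A scheduled task could log hitRatio() periodically. The clock is passed in as nowMillis to keep the sketch easy to test; real code would typically read System.currentTimeMillis().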

2. Async Analytics with @Async

Click tracking (incrementing counts and inserting click events) runs on a separate thread via Spring’s @Async. The 302 redirect response is sent immediately, so the user is redirected right away while analytics are recorded in the background.

Critical path stays fast. Non-critical work happens async.
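The split between the critical path and background work can be sketched without Spring. In this sketch a plain ExecutorService stands in for @Async, and an in-memory map stands in for the click tables. RedirectHandler and its methods are hypothetical names, not the project's actual classes.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch: a plain ExecutorService stands in for Spring's @Async.
class RedirectHandler {
    private final Map<String, String> urls; // shortCode -> original URL
    private final Map<String, AtomicLong> clickCounts = new ConcurrentHashMap<>();
    private final ExecutorService analyticsPool = Executors.newFixedThreadPool(2);

    RedirectHandler(Map<String, String> urls) {
        this.urls = urls;
    }

    // Critical path: look up the target and return it right away.
    // The click is handed to the background pool and never blocks the caller.
    String redirect(String shortCode) {
        String target = urls.get(shortCode);
        if (target != null) {
            analyticsPool.submit(() -> recordClick(shortCode));
        }
        return target;
    }

    // Non-critical path: failures are logged and dropped so they cannot
    // affect the redirect. A real system might retry or queue instead.
    private void recordClick(String shortCode) {
        try {
            clickCounts.computeIfAbsent(shortCode, k -> new AtomicLong()).incrementAndGet();
        } catch (RuntimeException e) {
            System.err.println("click tracking failed for " + shortCode + ": " + e.getMessage());
        }
    }

    long clicks(String shortCode) {
        AtomicLong count = clickCounts.get(shortCode);
        return count == null ? 0 : count.get();
    }

    // Stop accepting work and wait for queued analytics tasks to finish.
    boolean shutdown(long timeoutMillis) {
        analyticsPool.shutdown();
        try {
            return analyticsPool.awaitTermination(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

The log-and-drop policy in recordClick is only one option. Whether to retry, queue, or drop failed analytics writes is a design decision the sketch does not settle.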

3. Sliding-Window Rate Limiting (No Redis Required)

Built a custom rate limiter using ConcurrentHashMap with per-key timestamp deques:

- 100 requests/min per IP on redirects

- 10 URL creations/hour per authenticated user

Stale entries are cleaned up on a scheduled task to prevent memory leaks. No external dependencies (no Redis, no Bucket4j) — just core Java concurrency primitives.
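A minimal sketch of such a limiter, assuming one timestamp deque per key inside a ConcurrentHashMap. The class and method names are hypothetical, and the project's actual implementation may differ, for example in how it locks per key.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical sketch of a sliding-window limiter: one timestamp deque per key.
class SlidingWindowRateLimiter {
    private final int maxRequests;
    private final long windowMillis;
    private final Map<String, Deque<Long>> requests = new ConcurrentHashMap<>();

    SlidingWindowRateLimiter(int maxRequests, long windowMillis) {
        this.maxRequests = maxRequests;
        this.windowMillis = windowMillis;
    }

    // Returns true if the request is allowed and records its timestamp.
    // compute() runs atomically per key, so the deque needs no extra locking.
    boolean tryAcquire(String key, long nowMillis) {
        boolean[] allowed = {false};
        requests.compute(key, (k, existing) -> {
            Deque<Long> window = existing == null ? new ArrayDeque<>() : existing;
            evictExpired(window, nowMillis);
            if (window.size() < maxRequests) {
                window.addLast(nowMillis);
                allowed[0] = true;
            }
            return window;
        });
        return allowed[0];
    }

    // Intended for a scheduled task: drop keys whose timestamps have all expired.
    void cleanup(long nowMillis) {
        for (String key : requests.keySet()) {
            requests.computeIfPresent(key, (k, window) -> {
                evictExpired(window, nowMillis);
                return window.isEmpty() ? null : window; // returning null removes the key
            });
        }
    }

    int trackedKeys() {
        return requests.size();
    }

    // Remove timestamps that have fallen out of the window ending at nowMillis.
    private void evictExpired(Deque<Long> window, long nowMillis) {
        while (!window.isEmpty() && window.peekFirst() <= nowMillis - windowMillis) {
            window.pollFirst();
        }
    }
}
```

Under these assumptions, the two limits above would correspond to new SlidingWindowRateLimiter(100, 60_000) keyed by IP and new SlidingWindowRateLimiter(10, 3_600_000) keyed by user ID, with System.currentTimeMillis() passed as nowMillis.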

4. Environment-Driven Configuration

All secrets and connection strings are externalized via ${ENV_VAR:default} in application.properties. Local development works with zero config. Docker Compose injects production values. No hardcoded secrets in source.
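To make the ${ENV_VAR:default} rule concrete, here is a simplified illustration of how such a placeholder resolves: use the environment value if it is set, otherwise fall back to the default. Spring performs this resolution itself; the code below is not Spring's implementation, and PlaceholderResolver is a hypothetical name.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified illustration of ${NAME:default} resolution; not Spring's implementation.
class PlaceholderResolver {
    // Group 1 is the variable name, group 2 the optional default after the first ':'.
    private static final Pattern PLACEHOLDER =
            Pattern.compile("\\$\\{([^:}]+)(?::([^}]*))?\\}");

    // Replaces each placeholder with env.get(NAME), or the default if NAME is unset.
    static String resolve(String value, Map<String, String> env) {
        Matcher matcher = PLACEHOLDER.matcher(value);
        StringBuilder out = new StringBuilder();
        while (matcher.find()) {
            String name = matcher.group(1);
            String fallback = matcher.group(2); // null when no default was given
            String resolved = env.getOrDefault(name, fallback);
            if (resolved == null) {
                throw new IllegalArgumentException("No value or default for " + name);
            }
            matcher.appendReplacement(out, Matcher.quoteReplacement(resolved));
        }
        matcher.appendTail(out);
        return out.toString();
    }
}
```

For example, PlaceholderResolver.resolve("${DB_USER:root}", System.getenv()) yields "root" on a machine where DB_USER is unset, and the injected value when Docker Compose sets it. DB_USER is an assumed variable name used only for illustration.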

What I Learned

- Caching is a trade-off: You gain speed but lose consistency. Cache invalidation is hard. TTLs need tuning.

- Rate limiting is about memory: Every IP or user needs state. Without cleanup, you leak memory. Sliding windows are more accurate than fixed windows but cost more memory.

- Async helps, but: You need to handle failures. What if analytics writes fail? Do you retry? Log? Drop?

- Docker Compose is underrated: One command to spin up MySQL + backend + frontend? That’s powerful for demos and local dev.

Tech Stack

| Layer | Technology |
|---|---|
| Backend | Java, Spring Boot, Spring Security |
| Auth | JWT (jjwt), BCrypt |
| Cache | Caffeine (W-TinyLFU eviction) |
| Database | MySQL 8.0 |
| Frontend | React, React Router (SSR), TypeScript |
| Charts | Recharts |
| Infra | Docker Compose, multi-stage Dockerfiles |

Try It Yourself

```bash
git clone https://github.com/Md-Talim/gate.git
cd gate
docker compose up --build
```

Wait ~30s, then:

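```bash
# Log in and capture the JWT (register the demo user first if it does not exist yet)
TOKEN=$(curl -s -X POST http://localhost:8080/api/auth/public/login \
  -H "Content-Type: application/json" \
  -d '{"username":"demo","password":"password123"}' | grep -o '"token":"[^"]*"' | cut -d'"' -f4)

# Shorten a URL (the JSON body needs an explicit Content-Type header)
curl -s -X POST http://localhost:8080/api/urls/shorten \
  -H "Authorization: Bearer $TOKEN" \
  -H "Content-Type: application/json" \
  -d '{"originalUrl":"https://github.com/mdtalim"}'

# Test the redirect (replace SHORT_CODE with the code returned by the previous call)
curl -v http://localhost:8080/SHORT_CODE
```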

Open http://localhost:3000 for the dashboard.

What’s Next

- Custom aliases (let users pick their short codes)

- Batch click event writes for higher throughput

- Integration tests for caching + rate limiting

- Maybe Prometheus metrics for production observability

Takeaway

URL shorteners are simple on the surface. But when you care about performance, you start thinking about caching, rate limiting, async processing, and observability. That’s where it gets interesting.

Built with ☕, ⚛️, and too many 302s.

- Source: https://github.com/Md-Talim/gate

- Stack: Java, Spring Boot, React, MySQL, Docker